Introduction

Hi guys,

Welcome to the 8th part of the Hypervisor From Scratch. If you reach here, then you probably finished reading the 7th part, and personally, I believe the 7th part was the most challenging part to understand so hats off, you did a great job.

The 8th part would be an exciting part as we’ll see lots of real-world and practical examples of solving reverse-engineering related problems with hypervisors. For example, we’ll see how hidden hooks work in the presence of hypervisor or how to create a syscall hook, and we’re eventually able to transfer messages from vmx root to OS (vmx non-root) and then into user-mode thus it gives us a valuable bunch of information about how the system works.

Besides some OS-related concepts, we’ll also see some CPU related topics like VPIDs and some general information about how patches for Meltdown and Spectre works.

Event injection, Exception Bitmap, and also adding support to virtualize a hyper-v machine are other titles that will be discussed.

Before starting, I should give special thanks to my friend Petr Benes for his contributions to Hypervisor From Scratch, of course, Hypervisor From Scratch could never have existed without his help and to Liran Alon for great helps on fixing VPID problem, and to Gerhart for his in-depth knowledge about Hyper-V internals that makes Hypervisor From Scratch available for Hyper-V.

Overview

This part is divided into eight main sections :

- How to inject interrupts (Event) into the guest and Exception Bitmap

- Implementing hidden hooks using EPT

- Syscall hook

- Invalidating EPT caches using VPID

- Demonstrating a custom VMX Root-mode compatible message tracing mechanism and adding WPP Tracing to our Hypervisor

- We’ll add support to Hyper-V

- Fixing some previous design caveats

- Discussion (In this section we discuss the different question and approaches about various topics in this part)

The full source code of this tutorial is available on GitHub :

[https://github.com/SinaKarvandi/Hypervisor-From-Scratch]

Table of Contents

- Introduction

- Overview

- Table of Contents

Event Injection

- Vectored Events

- Interrupts

- Exceptions

- Exception Classifications

- Event Injection Fields

- Vectored Event Injection

- Exception Error Codes

- Vectored Events

- Exception Bitmap

- Monitor Trap Flag (MTF)

Hidden Hooks (Simulating Hardware Debug Registers Without Any Limitation)

- Hidden Hooks Scenarios for Read/Write and Execute

Implementing Hidden Hooks

Removing Hooks From Pages

- An Important Note When Modifying EPT Entries

- System-Call Hook

- Finding Kernel Base

- Finding SSDT and Shadow SSDT Tables

- Get Routine Address by Syscall Number

- Virtual Processor ID (VPID) & TLB

- INVVPID - Invalidate Translations Based on VPID

- Individual-address invalidation

- Single-context invalidation

- All-contexts invalidation

- Single-context invalidation, retaining global translations

- Important Notes For Using VPIDs

- INVVPID vs. INVPCID

- INVVPID - Invalidate Translations Based on VPID

- Designing A VMX Root-mode Compatible Message Tracing

- Concepts

- What’s a spinlock?

- Test-and-Set

- What do we mean by “Safe”?

- What is DPC?

- Challenges

- Designing A Spinlock

- Message Tracer Design

- Initialization Phase

- Sending Phase (Saving Buffer and adding them to pools)

- Reading Phase (Read buffers and send them to user-mode)

- Checking for new messages

- Sending messages to pools

- Receiving buffers and messages in user-mode

- IOCTL and managing user-mode requests

- User-mode notify callback

- Uninitialization Phase

- Concepts

- WPP Tracing

Supporting to Hyper-V

Enable Nested Virtualization

Hyper-V’s visible behavior in nested virtualization

Hyper-V Hypervisor Top-Level Functional Specification (TLFS)

Out of Range MSRs

Hyper-V Hypercalls (VMCALLs)

Hyper-V Interface CPUID Leaves

- Fixing Previous Design Issues

- Fixing the problem with pre-allocated buffers

- Avoid Intercepting Accesses to CR3

- Restoring IDTR, GDTR, GS Base and FS Base

- Let’s Test it!

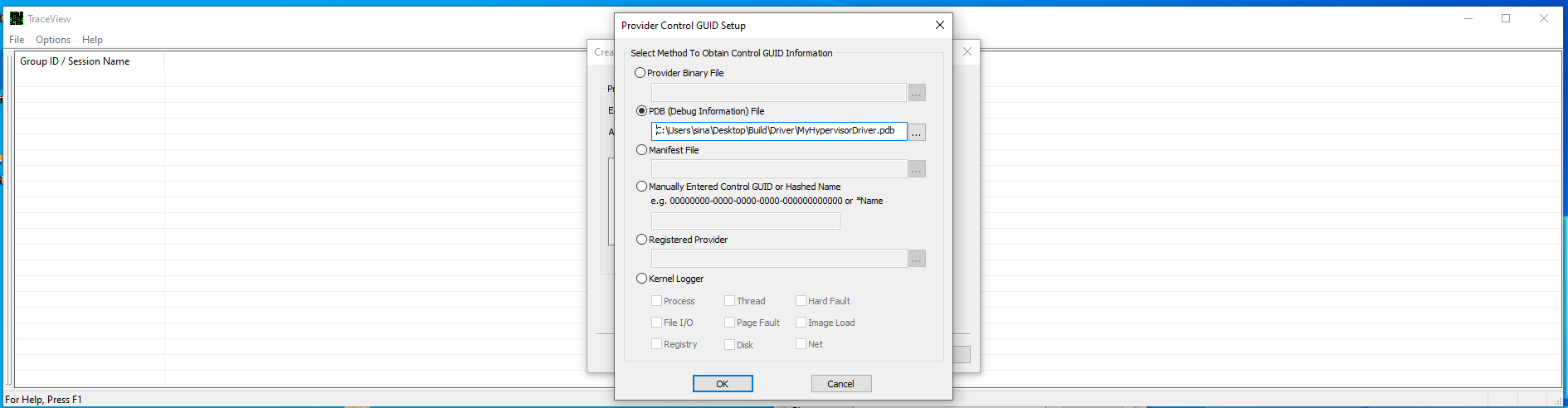

- View WPP Tracing Messages

- How to test?

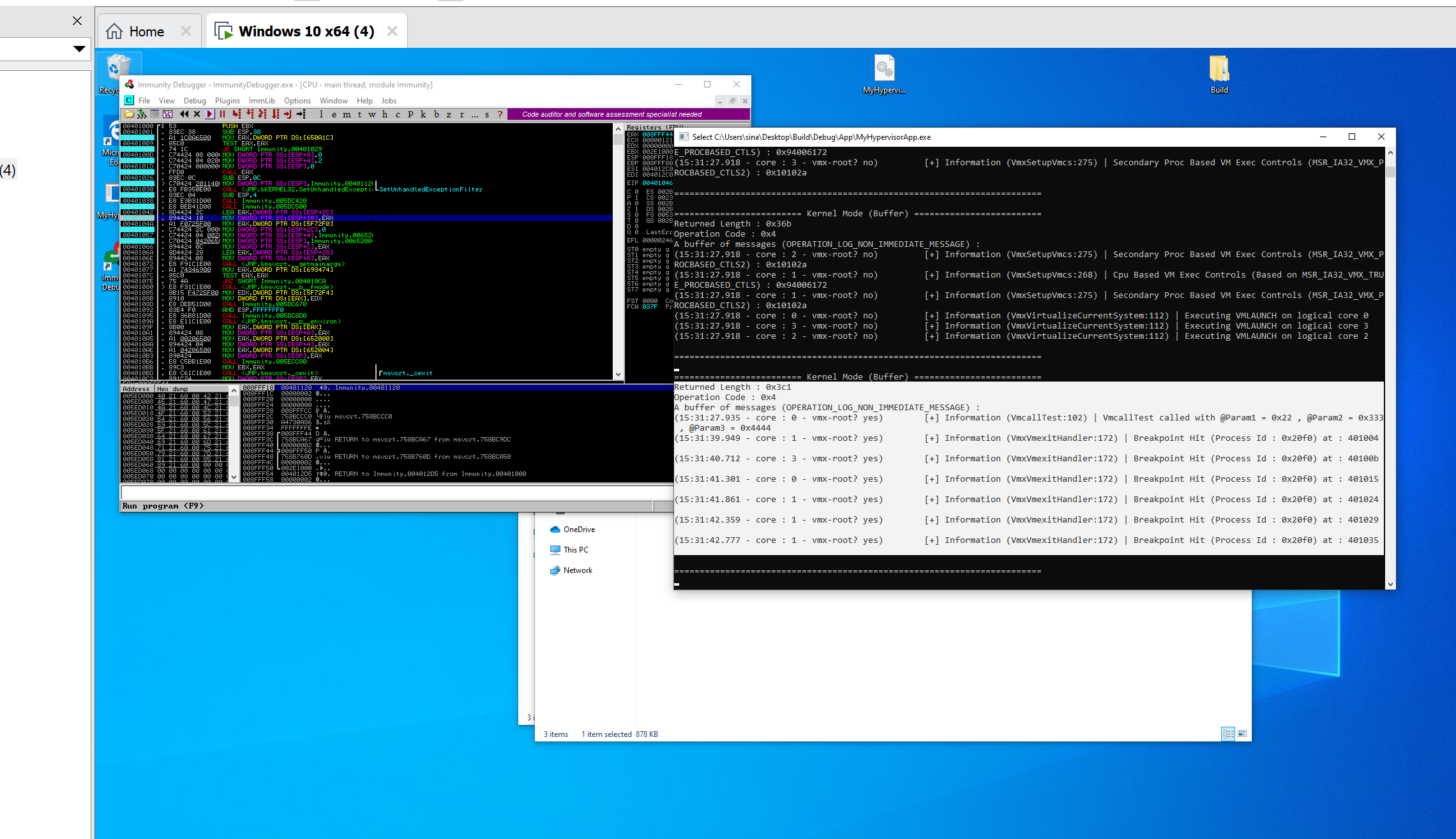

- Event Injection & Exception Bitmap Demo

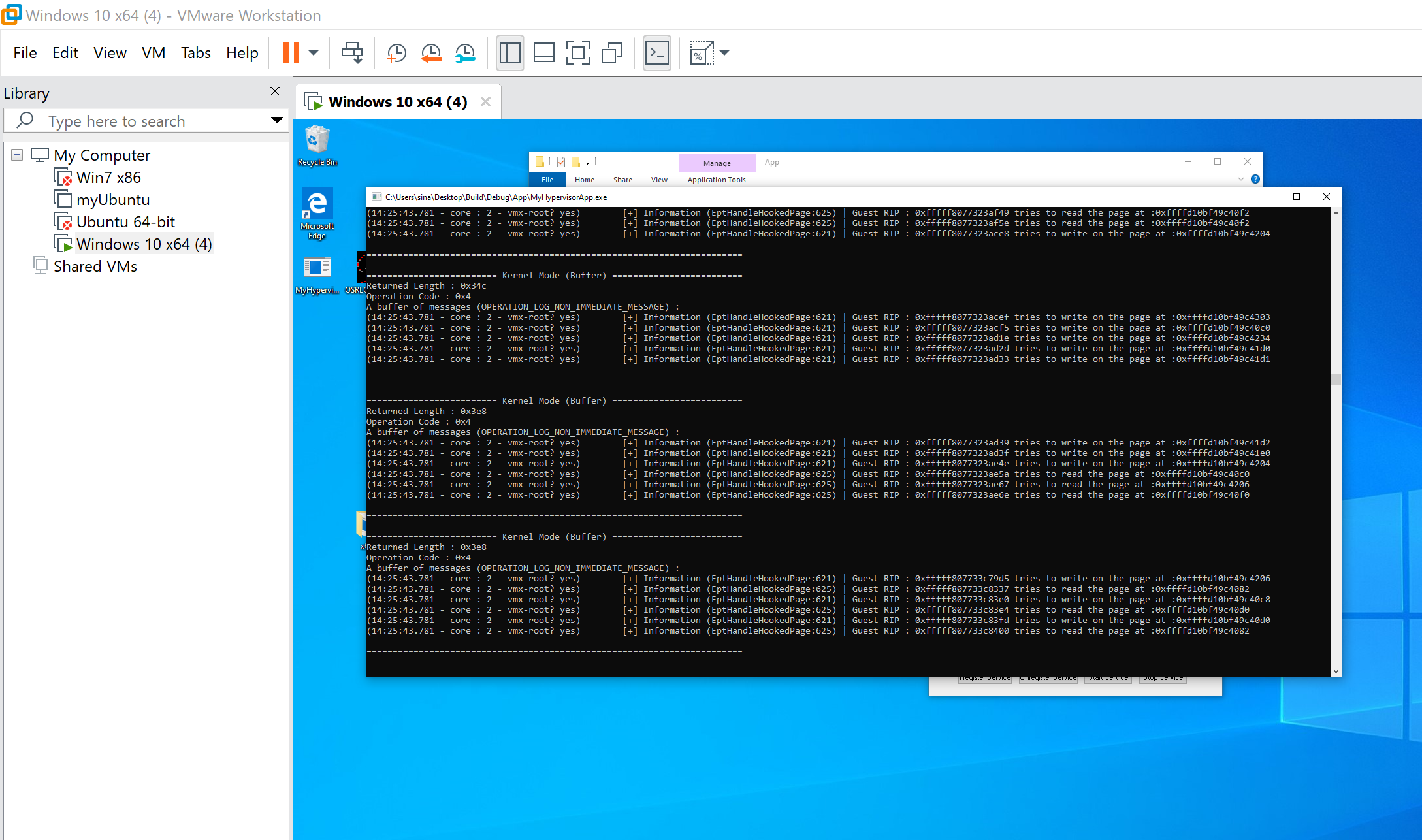

- Hidden Hooks Demo

- Read/Write Hooks or Hardware Debug Registers Simulation

- Hidden Execution Hook

- Syscall Hook Demo

- Discussion

- Conclusion

- References

Event Injection

One of the essential parts of the hypervisors is the ability to inject events (events are Interrupts, Exceptions, NMIs, and SMIs) as if they’ve arrived normally, and the capability to monitor received interrupts and exceptions.

This gives us a great ability to manage the guest operating system and unique ability to build applications, For example, if you are developing anti-cheat application, you can easily disable breakpoint and trap interrupts, and it completely disables all the features of Windbg or any other debugger as you’re the first one that is being notified about the breakpoint thus you can decide to abort the breakpoint or give it to the debugger.

This is just a simple example that the attacker needs to find a way around it. You can also use event injections for reverse-engineering purposes, e.g., directly inject a breakpoint into an application that uses different anti-debugging techniques to make its code hidden.

We can also implement some important features of our hypervisor like hidden hooks based on relying on event injection.

Before going deep into the Event Injection, we need to know some basic processor concepts and terms used by Intel. Most of them derived from this post and this answer.

Intel x86 defines two overlapping categories, vectored events (interrupts vs exceptions), and exception classes (faults vs traps vs aborts).

Vectored Events

Vectored Events (interrupts and exceptions) cause the processor to jump into an interrupt handler after saving much of the processor’s state (enough such that execution can continue from that point later).

Exceptions and interrupts have an ID, called a vector, that determines which interrupt handler the processor jumps to. Interrupt handlers are described within the Interrupt Descriptor Table (IDT).

Interrupts

Interrupts occur at random times during the execution of a program, in response to signals from the hardware. System hardware uses interrupts to handle events external to the processor, such as requests to service peripheral devices. The software can also generate interrupts by executing the INT n instruction.

Exceptions

Exceptions occur when the processor detects an error condition while executing an instruction, such as division by zero. The processor identifies a variety of error conditions, including protection violations, page faults, and internal machine faults.

Exception Classifications

Exceptions classified as faults, traps, or aborts depending on the way they reported and whether the instruction that caused the exception could be restarted without loss of program or task continuity.

In summary: traps increment the instruction pointer (RIP), faults do not, and aborts ’explode’.

We’ll start with the fault classification. You’ve probably heard of things called page faults (or segmentation faults if you’re from the past).

A fault is just an exception type that can be corrected and allows the processor the ability to execute some fault handler to rectify an offending operation without terminating the entire operation. When a fault occurs, the system state is reverted to an earlier state before the faulting operation occurred, and the fault handler is called. After executing the fault handler, the processor returns to the faulting instruction to execute it again. That last sentence is important because that means it redoes an instruction execution to make sure the proper results are used in the following operations. This is different from how a trap is handled.

A trap is an exception that is delivered immediately following the execution of a trapping instruction. In our hypervisor, we trap on various instructions, meaning that after the execution of an instruction – say rdtsc or rdtscp – a trap exception is reported to the processor. Once a trap exception is reported, control is passed to a trap handler, which will perform some operation(s). Following the execution of the trap handler, the processor returns to the instruction following the trapping instruction.

An abort, however, is an exception that occurs and doesn’t always yield the location of the error. Aborts are commonly used for reporting hardware errors, or otherwise. You won’t see these very often, and if you do… Well, you’re doing something wrong. It’s important to know that all exceptions are reported on an instruction boundary – excluding aborts. An instruction boundary is quite simple: if you have the bytes 0F 31 48 C1 E2 20 which translates to the instructions,

1

2

rdtsc

shl rdx, 20h

Then the instruction boundary would be between the bytes 31 and 48. That’s because 0F 31 is the instruction opcodes for rdtsc. This way, two instructions separated by a boundary.

Event Injection Fields

Event injection is done with using interruption-information field of VMCS.

The interruption-information is written into the VM-entry fields of the VMCS during VM-entry; after all the guest context has been loaded, including MSRs and Registers, it delivers the exception through the Interrupt Descriptor Table (IDT) using the vector specified in this field.

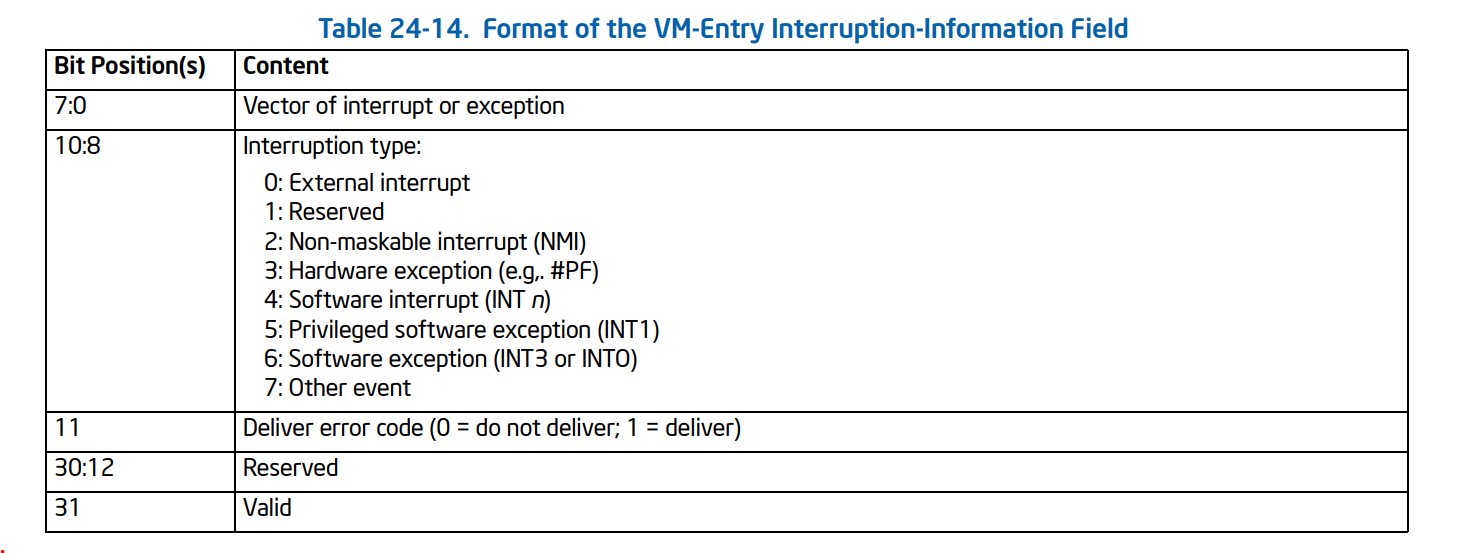

The first field to configure event injection is VM-entry interruption-information field (32 bits) or VM_ENTRY_INTR_INFO in the VMCS, this field provides details about the event to be injected.

The following picture shows the detail of each bit.

- The vector (bits 7:0) determines which entry in the IDT is used or which other event is injected or, in other words, it defines the index of Interrupt to be injected in IDT, for example, the following command (!idt) in windbg shows the IDT indexes. (note that the index is the numbers at the left).

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

lkd> !idt

Dumping IDT: fffff8012c05b000

00: fffff80126551100 nt!KiDivideErrorFaultShadow

01: fffff80126551180 nt!KiDebugTrapOrFaultShadow Stack = 0xFFFFF8012C05F9D0

02: fffff80126551200 nt!KiNmiInterruptShadow Stack = 0xFFFFF8012C05F7D0

03: fffff80126551280 nt!KiBreakpointTrapShadow

04: fffff80126551300 nt!KiOverflowTrapShadow

05: fffff80126551380 nt!KiBoundFaultShadow

06: fffff80126551400 nt!KiInvalidOpcodeFaultShadow

07: fffff80126551480 nt!KiNpxNotAvailableFaultShadow

08: fffff80126551500 nt!KiDoubleFaultAbortShadow Stack = 0xFFFFF8012C05F3D0

09: fffff80126551580 nt!KiNpxSegmentOverrunAbortShadow

0a: fffff80126551600 nt!KiInvalidTssFaultShadow

0b: fffff80126551680 nt!KiSegmentNotPresentFaultShadow

0c: fffff80126551700 nt!KiStackFaultShadow

0d: fffff80126551780 nt!KiGeneralProtectionFaultShadow

0e: fffff80126551800 nt!KiPageFaultShadow

10: fffff80126551880 nt!KiFloatingErrorFaultShadow

11: fffff80126551900 nt!KiAlignmentFaultShadow

12: fffff80126551980 nt!KiMcheckAbortShadow Stack = 0xFFFFF8012C05F5D0

13: fffff80126551a80 nt!KiXmmExceptionShadow

14: fffff80126551b00 nt!KiVirtualizationExceptionShadow

15: fffff80126551b80 nt!KiControlProtectionFaultShadow

1f: fffff80126551c00 nt!KiApcInterruptShadow

20: fffff80126551c80 nt!KiSwInterruptShadow

29: fffff80126551d00 nt!KiRaiseSecurityCheckFailureShadow

2c: fffff80126551d80 nt!KiRaiseAssertionShadow

2d: fffff80126551e00 nt!KiDebugServiceTrapShadow

2f: fffff80126551f00 nt!KiDpcInterruptShadow

30: fffff80126551f80 nt!KiHvInterruptShadow

31: fffff80126552000 nt!KiVmbusInterrupt0Shadow

32: fffff80126552080 nt!KiVmbusInterrupt1Shadow

33: fffff80126552100 nt!KiVmbusInterrupt2Shadow

34: fffff80126552180 nt!KiVmbusInterrupt3Shadow

...

The interruption type (bits 10:8) determines details of how the injection is performed.

In general, a VMM should use the type hardware exception for all exceptions other than the following:

- Breakpoint exceptions (#BP): a VMM should use the type software exception.

- Overflow exceptions (#OF): a VMM should use the use type software exception.

- Those debug exceptions (#DB) that are generated by INT1 (a VMM should use the use type privileged software exception).

For exceptions, the deliver-error-code bit (bit 11) determines whether delivery pushes an error code on

the guest stack. (we’ll talk about error-code later)

The last bit is that VM entry injects an event if and only if the valid bit (bit 31) is 1. The valid bit in this field is cleared on every VM exit means that when you want to inject an event, you set this bit to inject your interrupt and the processor will automatically clear it at the next VM-Exit.

The second field that controls the event injection is VM-entry exception error code.

VM-entry exception error code (32 bits) or VM_ENTRY_EXCEPTION_ERROR_CODE in the VMCS: This field is used if and only if the valid bit (bit 31) and the deliver error-code bit (bit 11) are both set in the VM-entry interruption-information field.

The third field that controls the event injection is VM-entry instruction length.

VM-entry instruction length (32 bits) or VM_ENTRY_INSTRUCTION_LEN in the VMCS: For injection of events whose type is a software interrupt, software exception, or privileged software exception, this field is used to determine the value of RIP that is pushed on the stack.

All in all, these things in VMCS control the Event Injection process: VM_ENTRY_INTR_INFO, VM_ENTRY_EXCEPTION_ERROR_CODE, VM_ENTRY_INSTRUCTION_LEN.

Vectored Event Injection

If the valid bit in the VM-entry interruption-information field is 1, VM entry causes an event to be delivered (or made pending) after all components of the guest state have been loaded (including MSRs) and after the VM-execution control fields have been established.

The interruption type (which is described above) can be one of the following values.

1

2

3

4

5

6

7

8

9

10

11

enum _INTERRUPT_TYPE

{

INTERRUPT_TYPE_EXTERNAL_INTERRUPT = 0,

INTERRUPT_TYPE_RESERVED = 1,

INTERRUPT_TYPE_NMI = 2,

INTERRUPT_TYPE_HARDWARE_EXCEPTION = 3,

INTERRUPT_TYPE_SOFTWARE_INTERRUPT = 4,

INTERRUPT_TYPE_PRIVILEGED_SOFTWARE_INTERRUPT = 5,

INTERRUPT_TYPE_SOFTWARE_EXCEPTION = 6,

INTERRUPT_TYPE_OTHER_EVENT = 7

};

Now it’s time to set the vector bit. The following enum is the representation of the indexes in IDT. (Look at the indexes of !idt command above).

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

typedef enum _EXCEPTION_VECTORS

{

EXCEPTION_VECTOR_DIVIDE_ERROR,

EXCEPTION_VECTOR_DEBUG_BREAKPOINT,

EXCEPTION_VECTOR_NMI,

EXCEPTION_VECTOR_BREAKPOINT,

EXCEPTION_VECTOR_OVERFLOW,

EXCEPTION_VECTOR_BOUND_RANGE_EXCEEDED,

EXCEPTION_VECTOR_UNDEFINED_OPCODE,

EXCEPTION_VECTOR_NO_MATH_COPROCESSOR,

EXCEPTION_VECTOR_DOUBLE_FAULT,

EXCEPTION_VECTOR_RESERVED0,

EXCEPTION_VECTOR_INVALID_TASK_SEGMENT_SELECTOR,

EXCEPTION_VECTOR_SEGMENT_NOT_PRESENT,

EXCEPTION_VECTOR_STACK_SEGMENT_FAULT,

EXCEPTION_VECTOR_GENERAL_PROTECTION_FAULT,

EXCEPTION_VECTOR_PAGE_FAULT,

EXCEPTION_VECTOR_RESERVED1,

EXCEPTION_VECTOR_MATH_FAULT,

EXCEPTION_VECTOR_ALIGNMENT_CHECK,

EXCEPTION_VECTOR_MACHINE_CHECK,

EXCEPTION_VECTOR_SIMD_FLOATING_POINT_NUMERIC_ERROR,

EXCEPTION_VECTOR_VIRTUAL_EXCEPTION,

EXCEPTION_VECTOR_RESERVED2,

EXCEPTION_VECTOR_RESERVED3,

EXCEPTION_VECTOR_RESERVED4,

EXCEPTION_VECTOR_RESERVED5,

EXCEPTION_VECTOR_RESERVED6,

EXCEPTION_VECTOR_RESERVED7,

EXCEPTION_VECTOR_RESERVED8,

EXCEPTION_VECTOR_RESERVED9,

EXCEPTION_VECTOR_RESERVED10,

EXCEPTION_VECTOR_RESERVED11,

EXCEPTION_VECTOR_RESERVED12

};

In general, the event is delivered as if it had been generated normally, and the event is delivered using the vector in that field to select a descriptor in the IDT. Since event injection occurs after loading IDTR (IDT Register) from the guest-state area, this is the guest IDT, or in other words, the event is delivered to GUEST_IDTR_BASE and GUEST_IDTR_LIMIT.

Putting the above descriptions into the implementation, we have the following function :

1

2

3

4

5

6

7

8

9

10

11

12

13

14

// Injects interruption to a guest

VOID EventInjectInterruption(INTERRUPT_TYPE InterruptionType, EXCEPTION_VECTORS Vector, BOOLEAN DeliverErrorCode, ULONG32 ErrorCode)

{

INTERRUPT_INFO Inject = { 0 };

Inject.Valid = TRUE;

Inject.InterruptType = InterruptionType;

Inject.Vector = Vector;

Inject.DeliverCode = DeliverErrorCode;

__vmx_vmwrite(VM_ENTRY_INTR_INFO, Inject.Flags);

if (DeliverErrorCode) {

__vmx_vmwrite(VM_ENTRY_EXCEPTION_ERROR_CODE, ErrorCode);

}

}

As an example we want to inject a #BP (breakpoint) into the guest, we can use the following code :

1

2

3

4

5

6

7

8

/* Inject #BP to the guest (Event Injection) */

VOID EventInjectBreakpoint()

{

EventInjectInterruption(INTERRUPT_TYPE_SOFTWARE_EXCEPTION, EXCEPTION_VECTOR_BREAKPOINT, FALSE, 0);

UINT32 ExitInstrLength;

__vmx_vmread(VM_EXIT_INSTRUCTION_LEN, &ExitInstrLength);

__vmx_vmwrite(VM_ENTRY_INSTRUCTION_LEN, ExitInstrLength);

}

Or if we want to inject a #GP(0) or general protection fault with error code 0 then we use the following code:

1

2

3

4

5

6

7

8

/* Inject #GP to the guest (Event Injection) */

VOID EventInjectGeneralProtection()

{

EventInjectInterruption(INTERRUPT_TYPE_HARDWARE_EXCEPTION, EXCEPTION_VECTOR_GENERAL_PROTECTION_FAULT, TRUE, 0);

UINT32 ExitInstrLength;

__vmx_vmread(VM_EXIT_INSTRUCTION_LEN, &ExitInstrLength);

__vmx_vmwrite(VM_ENTRY_INSTRUCTION_LEN, ExitInstrLength);

}

You can write functions for other types of interrupts and exceptions. The only thing that you should consider is the InterruptionType, which is always hardware exception except for #DP, #BP, #OF, which is discussed above.

Exception Error Codes

You might notice that we used VM_ENTRY_EXCEPTION_ERROR_CODE in the VMCS and 11th bit of the interruption-information field, and for some exceptions, we disabled them while for some others we set them to a specific value, so what’s that error codes?

Some exceptions will push a 32-bit “error code” on to the top of the stack, which provides additional information about the error. This value must be pulled from the stack before returning control back to the currently running program. (i.e., before calling IRET for returning from interrupt).

The fact that the error code must be pulled from the stack makes event injection more complicated as we have to make sure whether the Windows tries to pull error code from the stack or not, as it turns to error if we put something onto the stack that Windows doesn’t expect to pull it later or we didn’t push anything but Windows thoughts there is something in the stack that needs to be pulled.

The following table shows some of these exceptions with the presence or absence of Error code, this table is derived from Intel SDM, Volume 1, CHAPTER 6 (Table 6-1. Exceptions and Interrupts).

| Name | Vector nr. | Type | Mnemonic | Error code? |

|---|---|---|---|---|

| Divide-by-zero Error | 0 (0x0) | Fault | #DE | No |

| Debug | 1 (0x1) | Fault/Trap | #DB | No |

| Non-maskable Interrupt | 2 (0x2) | Interrupt | - | No |

| Breakpoint | 3 (0x3) | Trap | #BP | No |

| Overflow | 4 (0x4) | Trap | #OF | No |

| Bound Range Exceeded | 5 (0x5) | Fault | #BR | No |

| Invalid Opcode | 6 (0x6) | Fault | #UD | No |

| Device Not Available | 7 (0x7) | Fault | #NM | No |

| Double Fault | 8 (0x8) | Abort | #DF | Yes (Zero) |

| Coprocessor Segment Overrun | 9 (0x9) | Fault | - | No |

| Invalid TSS | 10 (0xA) | Fault | #TS | Yes |

| Segment Not Present | 11 (0xB) | Fault | #NP | Yes |

| Stack-Segment Fault | 12 (0xC) | Fault | #SS | Yes |

| General Protection Fault | 13 (0xD) | Fault | #GP | Yes |

| Page Fault | 14 (0xE) | Fault | #PF | Yes |

| Reserved | 15 (0xF) | - | - | No |

| x87 Floating-Point Exception | 16 (0x10) | Fault | #MF | No |

| Alignment Check | 17 (0x11) | Fault | #AC | Yes |

| Machine Check | 18 (0x12) | Abort | #MC | No |

| SIMD Floating-Point Exception | 19 (0x13) | Fault | #XM/#XF | No |

| Virtualization Exception | 20 (0x14) | Fault | #VE | No |

| Reserved | 21-29 (0x15-0x1D) | - | - | No |

| Security Exception | 30 (0x1E) | - | #SX | Yes |

| Reserved | 31 (0x1F) | - | - | No |

| Triple Fault | - | - | - | No |

| FPU Error Interrupt | IRQ 13 | Interrupt | #FERR | No |

Now that we learn how to create a new events, it’s time to see how to monitor system interrupts.

Exception Bitmap

If you remember from MSR Bitmaps, we have a mask for each MSR that shows whether the read or write on that MSR should cause a vm-exit or not.

The monitoring of exceptions uses the same method, which means that a simple mask governs it. This mask is EXCEPTION_BITMAP in VMCS.

The exception bitmap is a 32-bit field that contains one bit for each exception. When an exception occurs, its vector is used to select a bit in this field. If the bit is 1, the exception causes a VM exit. If the bit is 0, the exception is delivered normally through the IDT.

Now it’s up to you to decide whether you want to inject that exception back to the guest or change the state or whatever you want to do.

For example, if you set the 3rd bit of the EXCEPTION_BITMAP, then whenever a breakpoint occurs somewhere (both user-mode and kernel-mode), a vm-exit with EXIT_REASON_EXCEPTION_NMI (exit reason == 0) occurs.

1

2

// Set exception bitmap to hook division by zero (bit 1 of EXCEPTION_BITMAP)

__vmx_vmwrite(EXCEPTION_BITMAP, 0x8); // breakpoint 3nd bit

Now we can change the state of the program, then resume the guest, remember resuming the guest doesn’t cause the exception to be delivered to the guest, we have to inject an event manually if we want that the guest process the event normally. For example, we can use the function “EventInjectBreakpoint,” as mentioned earlier, to inject the exception back to the guest.

The last question is how we can find the index of exception that occurred, you know we might set exception bitmap for multiple exceptions, so we have to know the exact reason why this vm-exit happened or more clearly, what exception causes this vm-exit.

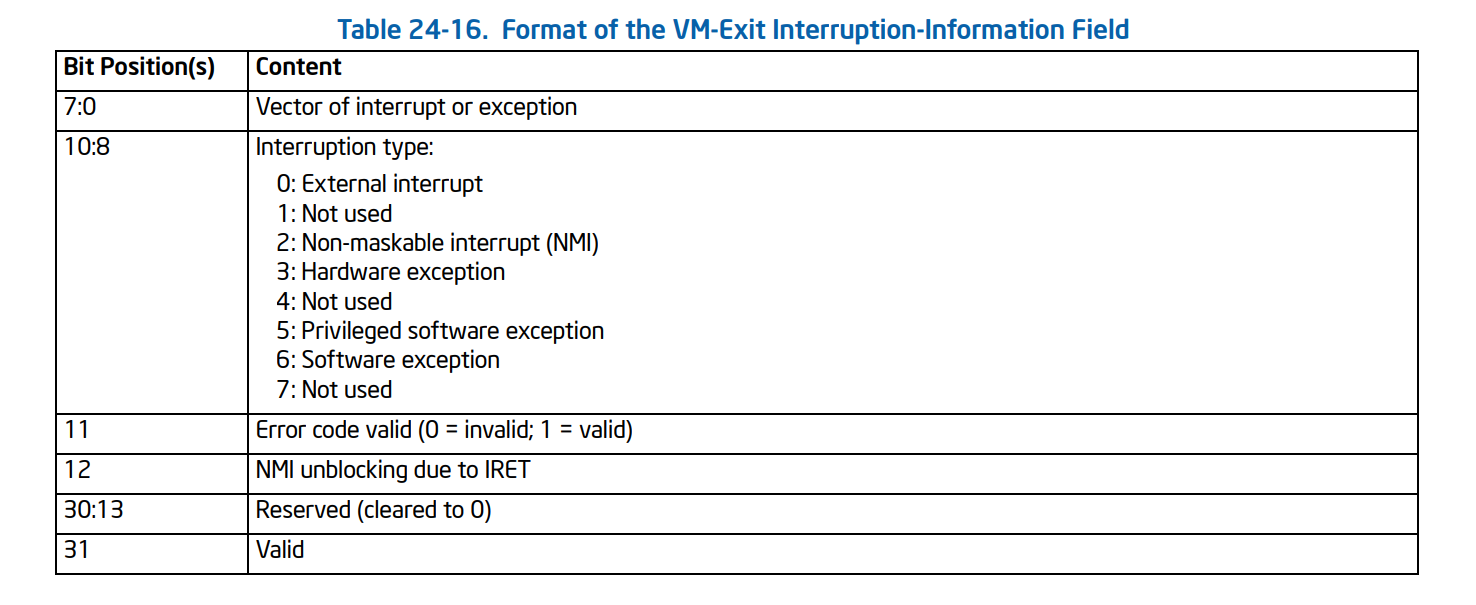

The following VMCS fields report us about the event,

- VM_EXIT_INTR_INFO

- VM_EXIT_INTR_ERROR_CODE

The following table shows how we can use VM_EXIT_INTR_INFO.

Which is the following structure:

1

2

3

4

5

6

7

8

9

10

11

typedef union _VMEXIT_INTERRUPT_INFO {

struct {

UINT32 Vector : 8;

UINT32 InterruptionType : 3;

UINT32 ErrorCodeValid : 1;

UINT32 NmiUnblocking : 1;

UINT32 Reserved : 18;

UINT32 Valid : 1;

};

UINT32 Flags;

}VMEXIT_INTERRUPT_INFO, * PVMEXIT_INTERRUPT_INFO;

And we can read the details using vmread instruction, for example, the following command shows how we can detect if breakpoint (0xcc) occurred.

1

2

3

4

5

6

7

// read the exit reason

__vmx_vmread(VM_EXIT_INTR_INFO, &InterruptExit);

if (InterruptExit.InterruptionType == INTERRUPT_TYPE_SOFTWARE_EXCEPTION && InterruptExit.Vector == EXCEPTION_VECTOR_BREAKPOINT)

{

// Do whatever , e.g re-inject the breakpoint

}

If we want to re-inject an exception that comes with an error code (see the above table), then the error code can be read using VM_EXIT_INTR_ERROR_CODE in VMCS. After that, write the error code to VM_ENTRY_EXCEPTION_ERROR_CODE and enable the deliver-error-code of VM_ENTRY_INTR_INFO to make sure that re-injection is without any flaw.

Also, keep in mind that page-fault is treated differently you can read Intel SDM for more information.

But wait! Have you notice that exception bitmap are just a 32-bit field in VMCS while we have up to 256 interrupts in IDT ?!

If you’re curious about this question you can read its answer in Discussion section.

Monitor Trap Flag (MTF)

Monitor Trap Flag or MTF is a feature that works exactly like Trap Flag in r/eflags except it’s invisible to the guest.

Whenever you set this flag on CPU_BASED_VM_EXEC_CONTROL, after VMRESUME, the processor executes one instruction then a vm-exit occurs.

We have to clear this flag otherwise each instruction cause a vm-exit.

The following function is responsible for setting and unsetting MTF.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

/* Set the monitor trap flag */

VOID HvSetMonitorTrapFlag(BOOLEAN Set)

{

ULONG CpuBasedVmExecControls = 0;

// Read the previous flag

__vmx_vmread(CPU_BASED_VM_EXEC_CONTROL, &CpuBasedVmExecControls);

if (Set) {

CpuBasedVmExecControls |= CPU_BASED_MONITOR_TRAP_FLAG;

}

else {

CpuBasedVmExecControls &= ~CPU_BASED_MONITOR_TRAP_FLAG;

}

// Set the new value

__vmx_vmwrite(CPU_BASED_VM_EXEC_CONTROL, CpuBasedVmExecControls);

}

Setting MTF leads to a vm-exit with exit reason (EXIT_REASON_MONITOR_TRAP_FLAG), we unset the MTF in the vm-exit handler.

MTF is essential in implementing hidden hooks, more details about MtfEptHookRestorePoint later in the hidden hooks section.

Here’s the MTF vm-exit handler.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

case EXIT_REASON_MONITOR_TRAP_FLAG:

{

/* Monitor Trap Flag */

if (GuestState[CurrentProcessorIndex].MtfEptHookRestorePoint)

{

// Restore the previous state

EptHandleMonitorTrapFlag(GuestState[CurrentProcessorIndex].MtfEptHookRestorePoint);

// Set it to NULL

GuestState[CurrentProcessorIndex].MtfEptHookRestorePoint = NULL;

}

else

{

LogError("Why MTF occured ?!");

}

// Redo the instruction

GuestState[CurrentProcessorIndex].IncrementRip = FALSE;

// We don't need MTF anymore

HvSetMonitorTrapFlag(FALSE);

break;

}

Hidden Hooks

(Simulating Hardware Debug Registers Without Any Limitation)

Have you ever used hardware debugger registers ?!

The debug registers allow researchers and programmers to selectively enable various debug conditions (read, write, execute) associated with a set of four debug addresses without any change in program instructions.

As you know, we can set up to 4 locations to these hardware registers, and it’s the worst limitation for these registers.

so what if we have a structure (let say _EPROCESS) and we want to see what function in Windows Read or Write in this structure?

It’s not possible with current debug registers but we use EPT to rescue !

Hidden Hooks Scenarios for Read/Write and Execute

We have two strategies for hidden hooks, one for Read/Write and one for Execute.

For Read/Write,

we unset read or write or both (based on how user wants) in the entry corresponding to the address.

This means before read or write a vm-exit occurs, and an EPT Violation will notify us. In the EPT Violation handler, we log the address that tries to read or write, then we find the entry in EPT table and set both read and write (means that any read or write to the page is allowed) and also set an MTF flag.

VMM resumes, and one instruction executes, or in other words, read or write is performed, then an MTF vm-exit occurs. In MTF vm-exit handler, we unset the read and write access again so any future access to that page will cause an EPT Violation.

Note that all of the above scenarios happen to one core. Each core has a separate TLB and separate Monitor Trap Flag.

For Execute,

For execution, we use a capability in Intel processors called execute-only.

Execute-only means that we can have a page with execute access enabled while read and write access is disabled.

If the user wants an execution hook, then we find the entry in EPT Table and unset read and write access and set the execute access. Then we create a copy from the original page (Page A) to somewhere else (Page B) and modify the copied page (Page B) with an absolute jump to the hook function.

Now, each time that any instruction attempted to execute our function, the absolute jump is performed, and our hook function is called. Each time any instruction tries to read or write to that location, an EPT Violation occurs as we unset read and write access to that page, so we can swap the original page (Page A) and also set the monitor trap flag to restore the hook after executing one instruction.

Wasn’t it easy ? Review it one more time if you didn’t understand.

You can also think about the different methods; for example, DdiMon creates a copy from that page and modifies the hook location by replacing one bytes (0xcc) breakpoint there. Now it intercepts each breakpoint (using Exception Bitmap) and swaps the original page. This method is much simpler to implement and more reliable, but it causes vm-exit for each hook, so it’s slower, but the first method for EPT Hooks never causes a vm-exit for execution.

Vm-exits for Read and Write hooks are unavoidable.

The execution hook for this part is derived from Gbps hv.

Let’s dig into implementation.

Implementing Hidden Hooks

For hooking functions, first, we split the page into 4KB entries, as described in the previous part. Then find the entry and read that entry. We want to save the details of a hooked page so we can use it later. For read/write hooks, we unset read or write or both, while for executing hooks, we unset read/write access and set execute access and also copy the page contents into a new page and swap the entry’s physical address with the second page’s physical address (fake page’s physical address).

Then we build a trampoline (explained later) and finally decide how to invalidate the TLB based on vmx-state (vmx-root or vmx non-root) and finally add the hook details to the HookedPagesList.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

/* This function returns false in VMX Non-Root Mode if the VM is already initialized

This function have to be called through a VMCALL in VMX Root Mode */

BOOLEAN EptPerformPageHook(PVOID TargetAddress, PVOID HookFunction, PVOID* OrigFunction, BOOLEAN UnsetRead, BOOLEAN UnsetWrite, BOOLEAN UnsetExecute) {

EPT_PML1_ENTRY ChangedEntry;

INVEPT_DESCRIPTOR Descriptor;

SIZE_T PhysicalAddress;

PVOID VirtualTarget;

PVOID TargetBuffer;

PEPT_PML1_ENTRY TargetPage;

PEPT_HOOKED_PAGE_DETAIL HookedPage;

ULONG LogicalCoreIndex;

// Check whether we are in VMX Root Mode or Not

LogicalCoreIndex = KeGetCurrentProcessorIndex();

if (GuestState[LogicalCoreIndex].IsOnVmxRootMode && !GuestState[LogicalCoreIndex].HasLaunched)

{

return FALSE;

}

/* Translate the page from a physical address to virtual so we can read its memory.

* This function will return NULL if the physical address was not already mapped in

* virtual memory.

*/

VirtualTarget = PAGE_ALIGN(TargetAddress);

PhysicalAddress = (SIZE_T)VirtualAddressToPhysicalAddress(VirtualTarget);

if (!PhysicalAddress)

{

LogError("Target address could not be mapped to physical memory");

return FALSE;

}

// Set target buffer, request buffer from pool manager , we also need to allocate new page to replace the current page ASAP

TargetBuffer = PoolManagerRequestPool(SPLIT_2MB_PAGING_TO_4KB_PAGE, TRUE, sizeof(VMM_EPT_DYNAMIC_SPLIT));

if (!TargetBuffer)

{

LogError("There is no pre-allocated buffer available");

return FALSE;

}

if (!EptSplitLargePage(EptState->EptPageTable, TargetBuffer, PhysicalAddress, LogicalCoreIndex))

{

LogError("Could not split page for the address : 0x%llx", PhysicalAddress);

return FALSE;

}

// Pointer to the page entry in the page table.

TargetPage = EptGetPml1Entry(EptState->EptPageTable, PhysicalAddress);

// Ensure the target is valid.

if (!TargetPage)

{

LogError("Failed to get PML1 entry of the target address");

return FALSE;

}

// Save the original permissions of the page

ChangedEntry = *TargetPage;

/* Execution is treated differently */

if (UnsetRead)

ChangedEntry.ReadAccess = 0;

else

ChangedEntry.ReadAccess = 1;

if (UnsetWrite)

ChangedEntry.WriteAccess = 0;

else

ChangedEntry.WriteAccess = 1;

/* Save the detail of hooked page to keep track of it */

HookedPage = PoolManagerRequestPool(TRACKING_HOOKED_PAGES, TRUE, sizeof(EPT_HOOKED_PAGE_DETAIL));

if (!HookedPage)

{

LogError("There is no pre-allocated pool for saving hooked page details");

return FALSE;

}

// Save the virtual address

HookedPage->VirtualAddress = TargetAddress;

// Save the physical address

HookedPage->PhysicalBaseAddress = PhysicalAddress;

// Fake page content physical address

HookedPage->PhysicalBaseAddressOfFakePageContents = (SIZE_T)VirtualAddressToPhysicalAddress(&HookedPage->FakePageContents[0]) / PAGE_SIZE;

// Save the entry address

HookedPage->EntryAddress = TargetPage;

// Save the orginal entry

HookedPage->OriginalEntry = *TargetPage;

// If it's Execution hook then we have to set extra fields

if (UnsetExecute)

{

// Show that entry has hidden hooks for execution

HookedPage->IsExecutionHook = TRUE;

// In execution hook, we have to make sure to unset read, write because

// an EPT violation should occur for these cases and we can swap the original page

ChangedEntry.ReadAccess = 0;

ChangedEntry.WriteAccess = 0;

ChangedEntry.ExecuteAccess = 1;

// Also set the current pfn to fake page

ChangedEntry.PageFrameNumber = HookedPage->PhysicalBaseAddressOfFakePageContents;

// Copy the content to the fake page

RtlCopyBytes(&HookedPage->FakePageContents, VirtualTarget, PAGE_SIZE);

// Create Hook

if (!EptHookInstructionMemory(HookedPage, TargetAddress, HookFunction, OrigFunction))

{

LogError("Could not build the hook.");

return FALSE;

}

}

// Save the modified entry

HookedPage->ChangedEntry = ChangedEntry;

// Add it to the list

InsertHeadList(&EptState->HookedPagesList, &(HookedPage->PageHookList));

/***********************************************************/

// if not launched, there is no need to modify it on a safe environment

if (!GuestState[LogicalCoreIndex].HasLaunched)

{

// Apply the hook to EPT

TargetPage->Flags = ChangedEntry.Flags;

}

else

{

// Apply the hook to EPT

EptSetPML1AndInvalidateTLB(TargetPage, ChangedEntry, INVEPT_SINGLE_CONTEXT);

}

return TRUE;

}

Now we need a function that creates another page and patches the original page (Page A) with an absolute jump (trampoline) that jumps another page (Page B).

In (Page B) we will jump to the hooked function also this function copies the bytes that are patched to the (Page B) and save the original function for the caller to return back to the original page on (Page B).

This is a simple inline hook that we use LDE (LDE64x64) as the detour function.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

BOOLEAN EptHookInstructionMemory(PEPT_HOOKED_PAGE_DETAIL Hook, PVOID TargetFunction, PVOID HookFunction, PVOID* OrigFunction)

{

SIZE_T SizeOfHookedInstructions;

SIZE_T OffsetIntoPage;

OffsetIntoPage = ADDRMASK_EPT_PML1_OFFSET((SIZE_T)TargetFunction);

LogInfo("OffsetIntoPage: 0x%llx", OffsetIntoPage);

if ((OffsetIntoPage + 13) > PAGE_SIZE - 1)

{

LogError("Function extends past a page boundary. We just don't have the technology to solve this.....");

return FALSE;

}

/* Determine the number of instructions necessary to overwrite using Length Disassembler Engine */

for (SizeOfHookedInstructions = 0;

SizeOfHookedInstructions < 13;

SizeOfHookedInstructions += LDE(TargetFunction, 64))

{

// Get the full size of instructions necessary to copy

}

LogInfo("Number of bytes of instruction mem: %d", SizeOfHookedInstructions);

/* Build a trampoline */

/* Allocate some executable memory for the trampoline */

Hook->Trampoline = PoolManagerRequestPool(EXEC_TRAMPOLINE, TRUE, MAX_EXEC_TRAMPOLINE_SIZE);

if (!Hook->Trampoline)

{

LogError("Could not allocate trampoline function buffer.");

return FALSE;

}

/* Copy the trampoline instructions in. */

RtlCopyMemory(Hook->Trampoline, TargetFunction, SizeOfHookedInstructions);

/* Add the absolute jump back to the original function. */

EptHookWriteAbsoluteJump(&Hook->Trampoline[SizeOfHookedInstructions], (SIZE_T)TargetFunction + SizeOfHookedInstructions);

LogInfo("Trampoline: 0x%llx", Hook->Trampoline);

LogInfo("HookFunction: 0x%llx", HookFunction);

/* Let the hook function call the original function */

*OrigFunction = Hook->Trampoline;

/* Write the absolute jump to our shadow page memory to jump to our hook. */

EptHookWriteAbsoluteJump(&Hook->FakePageContents[OffsetIntoPage], (SIZE_T)HookFunction);

return TRUE;

}

For creating a simple absolute jump we use the following function.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

/* Write an absolute x64 jump to an arbitrary address to a buffer. */

VOID EptHookWriteAbsoluteJump(PCHAR TargetBuffer, SIZE_T TargetAddress)

{

/* mov r15, Target */

TargetBuffer[0] = 0x49;

TargetBuffer[1] = 0xBB;

/* Target */

*((PSIZE_T)&TargetBuffer[2]) = TargetAddress;

/* push r15 */

TargetBuffer[10] = 0x41;

TargetBuffer[11] = 0x53;

/* ret */

TargetBuffer[12] = 0xC3;

}

In the case of EPT Violations, first, we find the details of the physical address that caused this vm-exit. Then we call EptHandleHookedPage to create a log about the details then we set an MTF to restore to the hooked state after executing one instruction.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

/* Check if this exit is due to a violation caused by a currently hooked page. Returns FALSE

* if the violation was not due to a page hook.

*

* If the memory access attempt was RW and the page was marked executable, the page is swapped with

* the original page.

*

* If the memory access attempt was execute and the page was marked not executable, the page is swapped with

* the hooked page.

*/

BOOLEAN EptHandlePageHookExit(VMX_EXIT_QUALIFICATION_EPT_VIOLATION ViolationQualification, UINT64 GuestPhysicalAddr)

{

BOOLEAN IsHandled = FALSE;

PLIST_ENTRY TempList = 0;

TempList = &EptState->HookedPagesList;

while (&EptState->HookedPagesList != TempList->Flink)

{

TempList = TempList->Flink;

PEPT_HOOKED_PAGE_DETAIL HookedEntry = CONTAINING_RECORD(TempList, EPT_HOOKED_PAGE_DETAIL, PageHookList);

if (HookedEntry->PhysicalBaseAddress == PAGE_ALIGN(GuestPhysicalAddr))

{

/* We found an address that match the details */

/*

Returning true means that the caller should return to the ept state to the previous state when this instruction is executed

by setting the Monitor Trap Flag. Return false means that nothing special for the caller to do

*/

if (EptHandleHookedPage(HookedEntry, ViolationQualification, GuestPhysicalAddr))

{

// Next we have to save the current hooked entry to restore on the next instruction's vm-exit

GuestState[KeGetCurrentProcessorNumber()].MtfEptHookRestorePoint = HookedEntry;

// We have to set Monitor trap flag and give it the HookedEntry to work with

HvSetMonitorTrapFlag(TRUE);

}

// Indicate that we handled the ept violation

IsHandled = TRUE;

// Get out of the loop

break;

}

}

// Redo the instruction

GuestState[KeGetCurrentProcessorNumber()].IncrementRip = FALSE;

return IsHandled;

}

Each time an EPT Violation occurs, we check whether it was because Read Access or Write Access or Execute Access violation and log GUEST_RIP, then we restore the initial flags (All read, write, and exec is allowed).

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

BOOLEAN EptHandleHookedPage(EPT_HOOKED_PAGE_DETAIL* HookedEntryDetails, VMX_EXIT_QUALIFICATION_EPT_VIOLATION ViolationQualification, SIZE_T PhysicalAddress) {

ULONG64 GuestRip;

ULONG64 ExactAccessedAddress;

ULONG64 AlignedVirtualAddress;

ULONG64 AlignedPhysicalAddress;

// Get alignment

AlignedVirtualAddress = PAGE_ALIGN(HookedEntryDetails->VirtualAddress);

AlignedPhysicalAddress = PAGE_ALIGN(PhysicalAddress);

// Let's read the exact address that was accesses

ExactAccessedAddress = AlignedVirtualAddress + PhysicalAddress - AlignedPhysicalAddress;

// Reading guest's RIP

__vmx_vmread(GUEST_RIP, &GuestRip);

if (!ViolationQualification.EptExecutable && ViolationQualification.ExecuteAccess)

{

LogInfo("Guest RIP : 0x%llx tries to execute the page at : 0x%llx", GuestRip, ExactAccessedAddress);

}

else if (!ViolationQualification.EptWriteable && ViolationQualification.WriteAccess)

{

LogInfo("Guest RIP : 0x%llx tries to write on the page at :0x%llx", GuestRip, ExactAccessedAddress);

}

else if (!ViolationQualification.EptReadable && ViolationQualification.ReadAccess)

{

LogInfo("Guest RIP : 0x%llx tries to read the page at :0x%llx", GuestRip, ExactAccessedAddress);

}

else

{

// there was an unexpected ept violation

return FALSE;

}

EptSetPML1AndInvalidateTLB(HookedEntryDetails->EntryAddress, HookedEntryDetails->OriginalEntry, INVEPT_SINGLE_CONTEXT);

// Means that restore the Entry to the previous state after current instruction executed in the guest

return TRUE;

}

That’s it! We have a working hidden hooks.

Removing Hooks From Pages

Removing hooks from pages are essential to us because of two reasons; first, sometimes we need to disable the hooks, and second, when we want to turn off hypervisor, we have to remove all the hooks. Otherwise, we might encounter strange behavior.

Removing hooks is simple as we saved details, including original entries in PageHookList; we have to find entries in this list and broadcast to all processors to update their TLBs and also remove that entry.

The following function is for this purpose.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

/* Remove single hook from the hooked pages list and invalidate TLB */

BOOLEAN HvPerformPageUnHookSinglePage(UINT64 VirtualAddress) {

PLIST_ENTRY TempList = 0;

SIZE_T PhysicalAddress;

PhysicalAddress = PAGE_ALIGN(VirtualAddressToPhysicalAddress(VirtualAddress));

// Should be called from vmx non-root

if (GuestState[KeGetCurrentProcessorNumber()].IsOnVmxRootMode)

{

return FALSE;

}

TempList = &EptState->HookedPagesList;

while (&EptState->HookedPagesList != TempList->Flink)

{

TempList = TempList->Flink;

PEPT_HOOKED_PAGE_DETAIL HookedEntry = CONTAINING_RECORD(TempList, EPT_HOOKED_PAGE_DETAIL, PageHookList);

if (HookedEntry->PhysicalBaseAddress == PhysicalAddress)

{

// Remove it in all the cores

KeGenericCallDpc(HvDpcBroadcastRemoveHookAndInvalidateSingleEntry, HookedEntry->PhysicalBaseAddress);

// remove the entry from the list

RemoveEntryList(HookedEntry->PageHookList.Flink);

return TRUE;

}

}

// Nothing found , probably the list is not found

return FALSE;

}

In vmx-root, we also search for the specific hook and use EptSetPML1AndInvalidateTLB to return that entry to the initial state, which is previously saved in OriginalEntry.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

/* Remove and Invalidate Hook in TLB */

// Caution : This function won't remove entries from LIST_ENTRY, just invalidate the paging, use HvPerformPageUnHookSinglePage instead

BOOLEAN EptPageUnHookSinglePage(SIZE_T PhysicalAddress) {

PLIST_ENTRY TempList = 0;

// Should be called from vmx-root, for calling from vmx non-root use the corresponding VMCALL

if (!GuestState[KeGetCurrentProcessorNumber()].IsOnVmxRootMode)

{

return FALSE;

}

TempList = &EptState->HookedPagesList;

while (&EptState->HookedPagesList != TempList->Flink)

{

TempList = TempList->Flink;

PEPT_HOOKED_PAGE_DETAIL HookedEntry = CONTAINING_RECORD(TempList, EPT_HOOKED_PAGE_DETAIL, PageHookList);

if (HookedEntry->PhysicalBaseAddress == PAGE_ALIGN(PhysicalAddress))

{

// Undo the hook on the EPT table

EptSetPML1AndInvalidateTLB(HookedEntry->EntryAddress, HookedEntry->OriginalEntry, INVEPT_SINGLE_CONTEXT);

return TRUE;

}

}

// Nothing found , probably the list is not found

return FALSE;

}

If we want to unhook all the pages, then we use another VMCALL, there is no need to iterate through the list here as all of the hooks must be removed. Just broadcast it through all the cores.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

/* Remove all hooks from the hooked pages list and invalidate TLB */

// Should be called from Vmx Non-root

VOID HvPerformPageUnHookAllPages() {

// Should be called from vmx non-root

if (GuestState[KeGetCurrentProcessorNumber()].IsOnVmxRootMode)

{

return;

}

// Remove it in all the cores

KeGenericCallDpc(HvDpcBroadcastRemoveHookAndInvalidateAllEntries, 0x0);

// No need to remove the list as it will automatically remove by the pool uninitializer

}

In vmx-root we just iterate through the list and restore them to the initial state.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

/* Remove and Invalidate Hook in TLB */

// Caution : This function won't remove entries from LIST_ENTRY, just invalidate the paging, use HvPerformPageUnHookAllPages instead

VOID EptPageUnHookAllPages() {

PLIST_ENTRY TempList = 0;

// Should be called from vmx-root, for calling from vmx non-root use the corresponding VMCALL

if (!GuestState[KeGetCurrentProcessorNumber()].IsOnVmxRootMode)

{

return FALSE;

}

TempList = &EptState->HookedPagesList;

while (&EptState->HookedPagesList != TempList->Flink)

{

TempList = TempList->Flink;

PEPT_HOOKED_PAGE_DETAIL HookedEntry = CONTAINING_RECORD(TempList, EPT_HOOKED_PAGE_DETAIL, PageHookList);

// Undo the hook on the EPT table

EptSetPML1AndInvalidateTLB(HookedEntry->EntryAddress, HookedEntry->OriginalEntry, INVEPT_SINGLE_CONTEXT);

}

}

An Important Note When Modifying EPT Entries

One interesting thing that I encountered during the test of my driver on the multi-core system was the fact that EPT entries should be modified in one instruction.

For example, if you change the access bits of an EPT entry, bit by bit, then you probably get the error (EPT Misconfiguration) that one access bits changed and before the next access bit applies another core tries to access page table and it sometimes leads to an EPT Misconfiguration and sometimes you might not get the desired behavior.

For example the following method for modifying EPT entries is wrong!

1

2

3

HookedEntryDetails->EntryAddress->ExecuteAccess = 1;

HookedEntryDetails->EntryAddress->WriteAccess = 1;

HookedEntryDetails->EntryAddress->ReadAccess = 1;

But the following code is correct. (Applying changes in one instruction instantly).

1

2

// Apply the hook to EPT

TargetPage->Flags = OriginalEntry.Flags;

This is why we have the following function that acquires a spinlock that makes sure that only one entry is modified once and then invalidate that core’s TLB.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

/* This function set the specific PML1 entry in a spinlock protected area then invalidate the TLB ,

this function should be called from vmx root-mode

*/

VOID EptSetPML1AndInvalidateTLB(PEPT_PML1_ENTRY EntryAddress, EPT_PML1_ENTRY EntryValue, INVEPT_TYPE InvalidationType)

{

// acquire the lock

SpinlockLock(&Pml1ModificationAndInvalidationLock);

// set the value

EntryAddress->Flags = EntryValue.Flags;

// invalidate the cache

if (InvalidationType == INVEPT_SINGLE_CONTEXT)

{

InveptSingleContext(EptState->EptPointer.Flags);

}

else

{

InveptAllContexts();

}

// release the lock

SpinlockUnlock(&Pml1ModificationAndInvalidationLock);

}

The above function solves the problems of simultaneously modifying the EPT Table as we have one EPT Table for all cores.

System-Call Hook

When it comes to hypervisors, we have different options for hooking system-calls. Each of these methods has its own advantages and disadvantages.

Let’s review some of the methods, that we can use to hook system-calls.

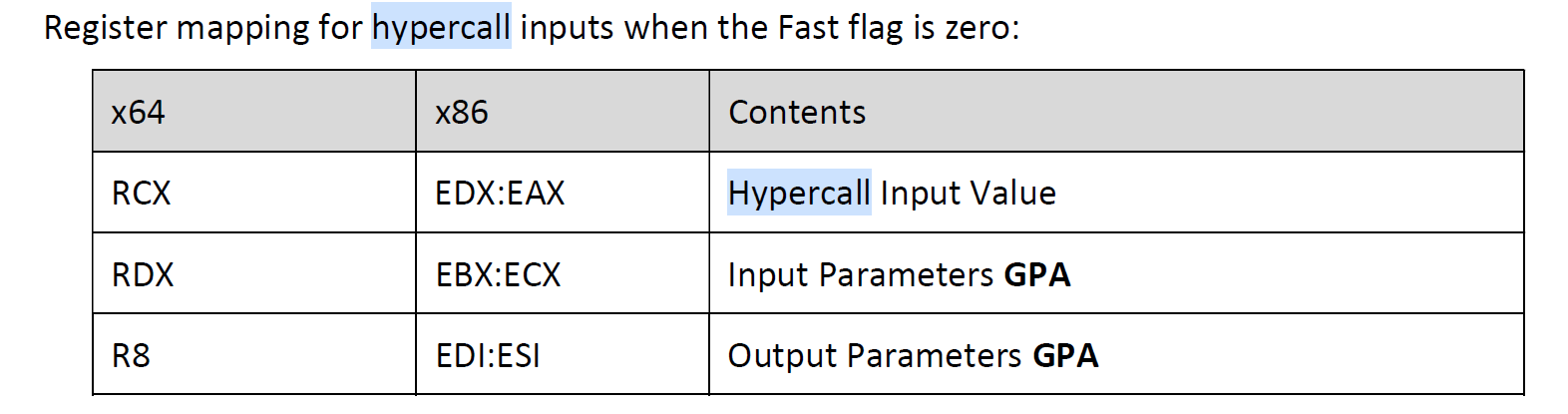

The first method is hooking MSR 0xc0000082 (LSTAR). This MSR is the kernel-entry for dispatching system-calls. Each time an instruction like Syscall is executed in user-mode, the processor automatically switches to kernel-mode and runs the address stored in this MSR. In Windows address of KiSystemCall64 is stored in this MSR.

This means that each time an application needs to call a system-call, it executes a syscall, and now this function is responsible for finding the entries in SSDT and call. In short, SSDT is a table in Windows that stores pointer to Windows function based on a system-call number. All SSDT entries and LSTAR MSR is under the control of PatchGuard.

This brings us three possibilities!

First, we can change the MSR LSTAR to point to our custom function, and to make it PatchGuard compatible, we can set MSR Bitmap that if any kernel routine wants to read this MSR, then a vm-exit occurs so we can change the result. Instead of showing our custom handler, we can show the KiSystemCall64, and PatchGuard will never know that this is a fake MSR.

Hooking MSR LSTAR is complicated, and updates to Meltdown make it even more complicated. In a post-meltdown system, LSTAR points to KiSystemCall64Shadow, which involves changing CR3 and execute KPTI-related instruction and Meltdown mitigation. It’s not a good idea to hook LSTAR as we have difficulties with pre-Meltdown and post-Meltdown mitigations and also as the system-state changes in this MSR so we can’t hook anything in the kernel as the kernel is not mapped on CR3.

Hyperbone uses this method (even it not updated for post-meltdown systems in the time of writing this article).

The second option is finding SSDT tables and change their entry to point to our custom functions, each time the PatchGuard tries to audit these entries, we can show it the not-patched listings. The only thing that we should keep in mind is to find where KiSystemCall64 tries to read that location and save that location somewhere so we can know that if the function that tries to read is syscall dispatcher our other functions (and probably PatchGuard).

Implementing this method is not super-fast as we need to unset EPT Read for SSDT entry, and each time a read happens, a vm-exit occurs, so we have one vm-exit for each syscall thus it makes our computer slow!

The third option is finding functions in SSDT entries and put a hidden hook on the functions that we need to hook. This way, we can catch a custom list of functions because I think hooking all system-calls is stupid!

We implement the third option in this part.

Another possible way is Syscall Hooking Via Extended Feature Enable Register (EFER), as described here. This method is based on disabling Syscall Enable (or SCE bit) of the EFER MSR; hence each time a Syscall is executed, a #UD exception is generated by the processor, and we can intercept #UD by using Exception Bitmap (described above) to handle these syscalls.

Again it’s not a good idea because it leads to a vm-exit for each syscall; thus, it’s substantially slow but usable for experimental purposes.

Also, they might be other options. Don’t hesitate to send a comment to this post and describe if you know one!

Finding Kernel Base

To find SSDT, we need to find nt!KeServiceDescriptorTable and nt!KeServiceDescriptorTableShadow, these tables are exported in x86 systems but not in x64. This makes the things much complicated as the routines to find these tables might change in future versions of Windows; thus, our Syscall hooker might have problems in future versions.

First of all, we need to find the base address of ntoskrnl, and it’s the image size, this is done by using ZwQuerySystemInformation, first, we find this function by using MmGetSystemRoutineAddress.

Then we allocate a memory to get the details from Windows and find the base address and module size.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

/* Get the kernel base and Image size */

PVOID SyscallHookGetKernelBase(PULONG pImageSize)

{

NTSTATUS status;

ZWQUERYSYSTEMINFORMATION ZwQSI = 0;

UNICODE_STRING routineName;

PVOID pModuleBase = NULL;

PSYSTEM_MODULE_INFORMATION pSystemInfoBuffer = NULL;

ULONG SystemInfoBufferSize = 0;

RtlInitUnicodeString(&routineName, L"ZwQuerySystemInformation");

ZwQSI = (ZWQUERYSYSTEMINFORMATION)MmGetSystemRoutineAddress(&routineName);

if (!ZwQSI)

return NULL;

status = ZwQSI(SystemModuleInformation,

&SystemInfoBufferSize,

0,

&SystemInfoBufferSize);

if (!SystemInfoBufferSize)

{

LogError("ZwQuerySystemInformation (1) failed");

return NULL;

}

pSystemInfoBuffer = (PSYSTEM_MODULE_INFORMATION)ExAllocatePool(NonPagedPool, SystemInfoBufferSize * 2);

if (!pSystemInfoBuffer)

{

LogError("ExAllocatePool failed");

return NULL;

}

memset(pSystemInfoBuffer, 0, SystemInfoBufferSize * 2);

status = ZwQSI(SystemModuleInformation,

pSystemInfoBuffer,

SystemInfoBufferSize * 2,

&SystemInfoBufferSize);

if (NT_SUCCESS(status))

{

pModuleBase = pSystemInfoBuffer->Module[0].ImageBase;

if (pImageSize)

*pImageSize = pSystemInfoBuffer->Module[0].ImageSize;

}

else {

LogError("ZwQuerySystemInformation (2) failed");

return NULL;

}

ExFreePool(pSystemInfoBuffer);

return pModuleBase;

}

Update 2: You can also use RtlPcToFileHeader instead of above method:

1

RtlPcToFileHeader(&RtlPcToFileHeader, &NtoskrnlBase);

Finding SSDT and Shadow SSDT Tables

Now that we have the base address ntoskrnl we can search for this pattern to find nt!KeServiceDescriptorTableShadow.

1

const unsigned char KiSystemServiceStartPattern[] = { 0x8B, 0xF8, 0xC1, 0xEF, 0x07, 0x83, 0xE7, 0x20, 0x25, 0xFF, 0x0F, 0x00, 0x00 };

nt!KeServiceDescriptorTableShadow contains the nt!KiServiceTable and win32k!W32pServiceTable, which is the SSDT of Syscall function for both NT Syscalls and Win32K Syscalls.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

/* Find SSDT address of Nt fucntions and W32Table */

BOOLEAN SyscallHookFindSsdt(PUINT64 NtTable, PUINT64 Win32kTable)

{

ULONG kernelSize = 0;

ULONG_PTR kernelBase;

const unsigned char KiSystemServiceStartPattern[] = { 0x8B, 0xF8, 0xC1, 0xEF, 0x07, 0x83, 0xE7, 0x20, 0x25, 0xFF, 0x0F, 0x00, 0x00 };

const ULONG signatureSize = sizeof(KiSystemServiceStartPattern);

BOOLEAN found = FALSE;

LONG relativeOffset = 0;

ULONG_PTR addressAfterPattern;

ULONG_PTR address;

SSDTStruct* shadow;

PVOID ntTable;

PVOID win32kTable;

//x64 code

kernelBase = (ULONG_PTR)SyscallHookGetKernelBase(&kernelSize);

if (kernelBase == 0 || kernelSize == 0)

return FALSE;

// Find KiSystemServiceStart

ULONG KiSSSOffset;

for (KiSSSOffset = 0; KiSSSOffset < kernelSize - signatureSize; KiSSSOffset++)

{

if (RtlCompareMemory(((unsigned char*)kernelBase + KiSSSOffset), KiSystemServiceStartPattern, signatureSize) == signatureSize)

{

found = TRUE;

break;

}

}

if (!found)

return FALSE;

addressAfterPattern = kernelBase + KiSSSOffset + signatureSize;

address = addressAfterPattern + 7; // Skip lea r10,[nt!KeServiceDescriptorTable]

// lea r11, KeServiceDescriptorTableShadow

if ((*(unsigned char*)address == 0x4c) &&

(*(unsigned char*)(address + 1) == 0x8d) &&

(*(unsigned char*)(address + 2) == 0x1d))

{

relativeOffset = *(LONG*)(address + 3);

}

if (relativeOffset == 0)

return FALSE;

shadow = (SSDTStruct*)(address + relativeOffset + 7);

ntTable = (PVOID)shadow;

win32kTable = (PVOID)((ULONG_PTR)shadow + 0x20); // Offset showed in Windbg

*NtTable = ntTable;

*Win32kTable = win32kTable;

return TRUE;

}

Note that nt!KeServiceDescriptorTable only contains the nt!KiServiceTable, and it doesn’t provide win32k!W32pServiceTable.

Get Routine Address by Syscall Number

After finding the NT Syscall Table and Win32k Syscall Table, now it’s time to translate Syscall Numbers to its corresponding address.

The following formula converts API Number to function address.

1

((SSDT->pServiceTable[ApiNumber] >> 4) + SSDTbase);

Keep in mind that NT Syscalls start from 0x0, but Win32k Syscalls start from 0x1000, so as we computer indexes based on the start of the table, we should minus the Win32k Syscalls with 0x1000.

All in all, we have the following function.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

/* Find entry from SSDT table of Nt fucntions and W32Table syscalls */

PVOID SyscallHookGetFunctionAddress(INT32 ApiNumber, BOOLEAN GetFromWin32k)

{

SSDTStruct* SSDT;

BOOLEAN Result;

ULONG_PTR SSDTbase;

ULONG ReadOffset;

UINT64 NtTable, Win32kTable;

// Read the address og SSDT

Result = SyscallHookFindSsdt(&NtTable, &Win32kTable);

if (!Result)

{

LogError("SSDT not found");

return 0;

}

if (!GetFromWin32k)

{

SSDT = NtTable;

}

else

{

// Win32k APIs start from 0x1000

ApiNumber = ApiNumber - 0x1000;

SSDT = Win32kTable;

}

SSDTbase = (ULONG_PTR)SSDT->pServiceTable;

if (!SSDTbase)

{

LogError("ServiceTable not found");

return 0;

}

return (PVOID)((SSDT->pServiceTable[ApiNumber] >> 4) + SSDTbase);

}

Now that we have the address of the routine that we want, now it’s time to put a hidden hook on that function, we also need their functions prototypes so we can read their arguments appropriately.

The syscall hook example is demonstrated later in the (How to test?) section.

Virtual Processor ID (VPID) & TLB

In Intel, its explanation about VPIDs is vague, so I found a great link that explains is so much more straightforward; hence it’s better to read the details below instead of starting with SDM.

The translation lookaside buffer (TLB) is a high-speed memory page cache for virtual to physical address translation. It follows the local principle to avoid time-consuming lookups for recently used pages.

Host mappings are not coherent to the guest and vice versa. Each guest has it’s own address space, the mapping table cannot be re-used in another guest (or host). Therefore first-generation VMs like Intel Core 2 (VMX) flush the TLB on each VM-enter (resume) and VM-exit. But flushing the TLB is a show-stopper, it is one of the most critical components in a modern CPU.

Intel engineers started to think about that. Intel Nehalem TLB entries have changed by introducing a Virtual Processor ID. So each TLB entry is tagged with this ID. The CPU does not specify VPIDs, the hypervisor allocates them, whereas the host VPID is 0. Starting with Intel Nehalem, the TLB must not be flushed. When a process tries to access a mapping where the actual VPID does not match with the TLB entry VPID a standard TLB miss occurs. Some Intel numbers show that the latency performance gain is 40% for a VM round trip transition compared to Meron, an Intel Core 2.

Imagine you have two or more VMs:

- If you enable VPIDs, you don’t have to worry that VM1 accidentally, fetches cached memory of VM2 (or even hypervisor itself)

- If you don’t enable VPIDs, CPU assigns VPID=0 to all operations (VMX root & VMX non-root) and flushes TLB on each transition for you

A logical processor may tag some cached information with a 16-bit VPID.

The VPID is 0000H in the following situations:

- Outside VMX operation. (e.g System Management Mode (SMM)).

- VMX root operation

- VMX non-root operation when the “enable VPID” VM-execution control is 0

INVVPID - Invalidate Translations Based on VPID

In order to support VPIDs, we have to add CPU_BASED_CTL2_ENABLE_VPID to Secondary Processor-Based VM-Execution Controls.

The next step is to set a 16-bit value to VMCS’s VIRTUAL_PROCESSOR_ID field using VMWRITE instruction. This value is used as an index for the current VMCS on this core so our current VMCS’s VPID is 1.

Also, as described above, 0 has special meaning and should not be used.

1

2

3

4

5

6

// Set up VPID

/* For all processors, we will use a VPID = 1. This allows the processor to separate caching

of EPT structures away from the regular OS page translation tables in the TLB. */

__vmx_vmwrite(VIRTUAL_PROCESSOR_ID, 1);

INVVPID (instruction) invalidates mappings in the translation lookaside buffers (TLBs) and paging-structure caches based on the virtual processor identifier (VPID).

For the INVVPID there are 4 types that currently supported by the processors which are reported in the IA32_VMX_EPT_VPID_CAP MSR.

The enumeration for these types are :

1

2

3

4

5

6

7

typedef enum _INVVPID_ENUM

{

INDIVIDUAL_ADDRESS = 0x00000000,

SINGLE_CONTEXT = 0x00000001,

ALL_CONTEXT = 0x00000002,

SINGLE_CONTEXT_RETAINING_GLOBALS = 0x00000003

}INVVPID_ENUM, *PINVVPID_ENUM;

I’ll describe these types in detail later.

For the implementation of INVVPID we use an assembly function like this (which executes invvpid from the RCX and RDX for x64 fast calling convention) :

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

AsmInvept PROC PUBLIC

invept rcx, oword ptr [rdx]

jz @jz

jc @jc

xor rax, rax

ret

@jz:

mov rax, VMX_ERROR_CODE_FAILED_WITH_STATUS

ret

@jc:

mov rax, VMX_ERROR_CODE_FAILED

ret

AsmInvept ENDP

and then, a general purpose function for calling this assembly function :

1

2

3

4

5

6

7

8

9

10

inline Invvpid(INVVPID_ENUM Type, INVVPID_DESCRIPTOR* Descriptor)

{

if (!Descriptor)

{

static INVVPID_DESCRIPTOR ZeroDescriptor = { 0 };

Descriptor = &ZeroDescriptor;

}

return AsmInvvpid(Type, Descriptor);

}

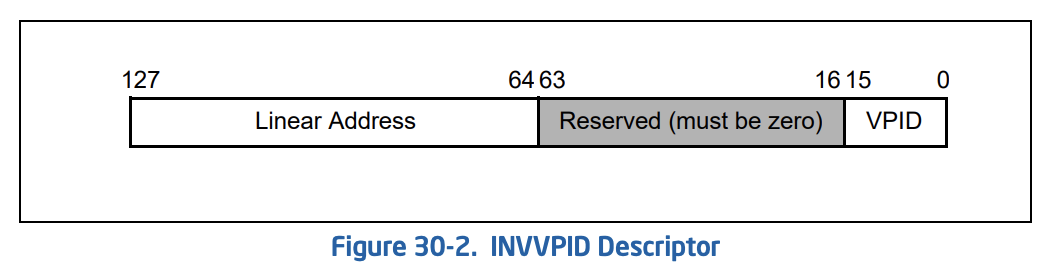

For INVVPID, there is a descriptor defined below.

This structure defined like this :

1

2

3

4

5

6

7

typedef struct _INVVPID_DESCRIPTOR

{

UINT64 VPID : 16;

UINT64 RESERVED : 48;

UINT64 LINEAR_ADDRESS;

} INVVPID_DESCRIPTOR, *PINVVPID_DESCRIPTOR;

The types of INVVPID is defined as below :

- Individual-address invalidation: If the INVVPID type is 0, the logical processor invalidates mappings for the linear address, and VPID specified in the INVVPID descriptor. In some cases, it may invalidate mappings for other linear addresses (or other VPIDs) as well.

1

2

3

4

5

inline InvvpidIndividualAddress(UINT16 Vpid, UINT64 LinearAddress)

{

INVVPID_DESCRIPTOR Descriptor = { Vpid, 0, LinearAddress };

return Invvpid(INDIVIDUAL_ADDRESS, &Descriptor);

}

- Single-context invalidation: If the INVVPID type is 1, the logical processor invalidates all mappings tagged with the VPID specified in the INVVPID descriptor. In some cases, it may invalidate mappings for other VPIDs as well.

1

2

3

4

5

inline InvvpidSingleContext(UINT16 Vpid)

{

INVVPID_DESCRIPTOR Descriptor = { Vpid, 0, 0 };

return Invvpid(SINGLE_CONTEXT, &Descriptor);

}

- All-contexts invalidation: If the INVVPID type is 2, the logical processor invalidates all mappings tagged with all VPIDs except VPID 0000H. In some cases, it may invalidate translations with VPID 0000H as well.

1

2

3

4

inline InvvpidAllContexts()

{

return Invvpid(ALL_CONTEXT, NULL);

}

- Single-context invalidation, retaining global translations: If the INVVPID type is 3, the logical processor invalidates all mappings tagged with the VPID specified in the INVVPID descriptor except global translations. In some cases, it may invalidate global translations (and mappings with other VPIDs) as well. See the “Caching Translation Information” section in Chapter 4 of the IA-32 Intel Architecture Software Developer’s Manual, Volumes 3A for information about global translations.

1

2

3

4

5

inline InvvpidSingleContextRetainingGlobals(UINT16 Vpid)

{

INVVPID_DESCRIPTOR Descriptor = { Vpid, 0, 0 };

return Invvpid(SINGLE_CONTEXT_RETAINING_GLOBALS, &Descriptor);

}

You probably think about how VPIDs can be used in the hypervisor. We can use it instead of INVEPT, but generally, it doesn’t have any particular usage for us. I described it more in the Discussion Section. By the way, VPIDs will be used in implementing special features as it’s more flexible than INVEPT and also when we have multiple VMCS (EPTP). (Can you think about some of them?).

Important Notes For Using VPIDs

There are some important things that you should know when using VPIDs.

Enabling VPIDs have a side-effect of not flushing TLB on VMEntry/VMExit. You should manually flush guest TLB entries if required (By using INVEPT/INVVPID). These issues might be hidden when VPID is disabled.

When VPID is disabled, VMEntry flushes the entire TLB. Thus, the hypervisor doesn’t need to explicitly invalidate TLB entries populated by the guest when performing an operation that should invalidate them (e.g., Modifying an EPT entry). When VPID is enabled, INVEPT/INVVPID should be used.

An easy way for you to find these kinds of issues is indeed the issue you have, is to execute INVEPT global-context before every VMEntry to flush entire TLB while still keeping VPID enabled. If it now works, you should check where you are missing an INVEPT execution.

In my experience, if you just enable VPIDs without any extra assumption, all processes start to crash one by one, and eventually, kernel crashes, and this is because we didn’t invalidate the TLB.

In order to solve the problem of crashing every process, we have to invalidate TLB in the case of Mov to Cr3 thus whenever a vm-exit occurs with reason == EXIT_REASON_CR_ACCESS (28) then if it’s a Mov to Cr3 we have to invalidate TLB (INVEPT or INVVPID [Look at the Update 1 for more details]).

So we edit the code like this:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

case TYPE_MOV_TO_CR:

{

switch (CrExitQualification->Fields.ControlRegister)

{

case 0:

__vmx_vmwrite(GUEST_CR0, *RegPtr);

__vmx_vmwrite(CR0_READ_SHADOW, *RegPtr);

break;

case 3:

__vmx_vmwrite(GUEST_CR3, (*RegPtr & ~(1ULL << 63)));

// InveptSingleContext(EptState->EptPointer.Flags); (changed, look for "Update 1" at the 8th part for more detail)

InvvpidSingleContext(VPID_TAG);

break;

case 4:

__vmx_vmwrite(GUEST_CR4, *RegPtr);

__vmx_vmwrite(CR4_READ_SHADOW, *RegPtr);

break;

default:

LogWarning("Unsupported register %d in handling control registers access", CrExitQualification->Fields.ControlRegister);

break;

}

}

Also, note that as we have a single EPTP for all cores then it’s enough to invalidate single-context otherwise we have to invalidate all-contexts.

Update 1 : As Satoshi Tanda mentioned,

The CR3 handler should use INVVPID instead of INVEPT because INVEPT invalidates more than needed. We want to invalid caches of GVA -> HPA (combined mappings), and both instructions do this. This is why INVEPT works too, but INVEPT also invalidates caches of GPA -> HPA (guest-physical mappings), which are not impacted by the guest CR3 change and can be kept without invalidation.

The general guideline is, INVVPID when TLB flush emulation is needed, and INVEPT when EPT entries are changed. You can find more info on those instructions and cache types in :

- 28.3.1 Information That May Be Cached

- 28.3.3.3 Guidelines for Use of the INVVPID Instruction.

so instead of InveptSingleContext we used InvvpidSingleContext.

Honestly, we have some misunderstanding about handling Cr3 vm-exits, even though the above code works fine, but generally, it has some performance penalties. I’ll explain these performance problems in the “Fixing Previous Design Issues” section.

You might also ask why we avoid writing the 63rd bit of the CR3.

1

__vmx_vmwrite(GUEST_CR3, (*RegPtr & ~(1ULL << 63)));

Bit 63 of CR3 is a new bit that is part of the PCID feature. It allows OS to change CR3 value without invalidating all TLB entries (tagged with the same EP4TA and VPID) besides those marked with global-bit.

EP4TA is the value of bits 51:12 of EPTP.

E.g. Windows KVA Shadowing and Linux KPTI signal this bit on CR3 mov that changes PCID between userspace PCID and kernel space PCID on user and kernel transitions.

We should not write on bit 63 of CR3 on mov reg, cr3 emulation because the processor does not write and attempt to write this will cause a crash on modern Win10.

INVVPID vs. INVPCID

INVPCID is not really relevant to hypervisor but in the case, if you want to know, INVPCID invalidates mappings in the translation lookaside buffers (TLBs) and paging-structure caches based on the process-context identifier (PCID).